Machine learning and AI

We develop AI-powered applications and ML pipelines using state-of-the-art models, frameworks, and MLOps best practices to deliver intelligent software solutions.

Technical realities in AI

95% of enterprise generative AI pilots fail to deliver a measurable business impact, primarily due to the accumulation of technical debt, inadequate infrastructure design, and insufficient operational frameworks. The transition from proof-of-concept to production-ready systems represents the most critical technical challenge facing CTOs today.

Only 20% of organizations successfully scale AI across multiple departments.

Critical technical challenges

Hidden technical debt in ML systems

Machine learning systems accumulate technical debt through complex data dependencies, entanglement, and configuration complexity. Unlike traditional software debt, ML technical debt erodes the boundaries between components: changing one input feature can shift the behavior of everything downstream, so changes become progressively more expensive over time.

Architectural anti-patterns we discover and fix

- Pipeline jungles: Complex preprocessing pipelines that become unmaintainable.

- Dead experimental codepaths: Unused feature extraction logic increases complexity.

- Glue code: Ad-hoc integration between ML libraries and business logic.

- Configuration debt: Complex parameter management without proper versioning.
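One way to keep configuration debt in check is to treat every training configuration as an immutable, fingerprinted object, so each model can be traced back to the exact parameters that produced it. The sketch below is illustrative only: `TrainingConfig` and its fields are hypothetical names, not part of any specific client setup.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TrainingConfig:
    # Hypothetical parameters, for illustration only.
    learning_rate: float = 0.01
    max_depth: int = 6
    feature_set: str = "v1"

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so equal configs always hash identically.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

Storing the fingerprint next to each trained model makes it possible to tell at a glance whether two runs used the same parameters.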

Our technical solutions

- Implement automated technical debt detection.

- Deploy AI-powered refactoring systems that analyze code patterns and optimize performance.

- Enforce modular architecture patterns with clear separation of concerns.

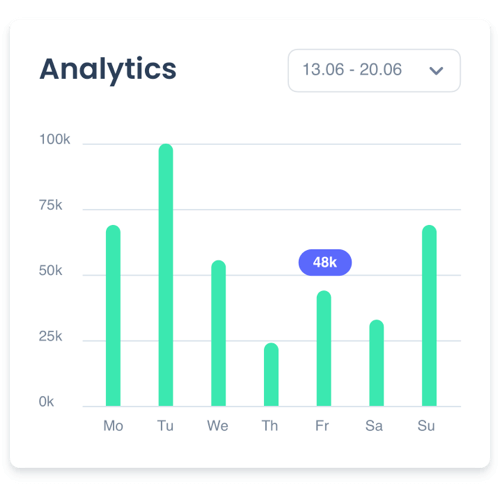

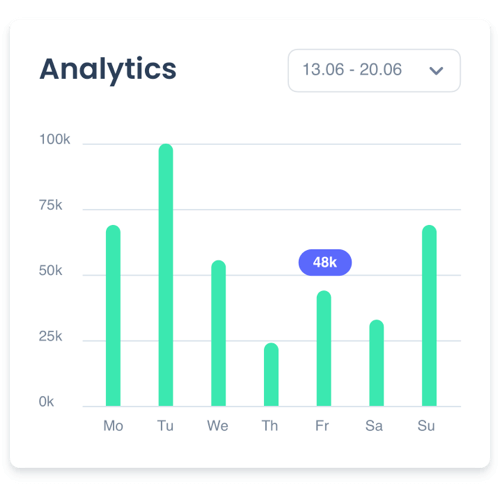

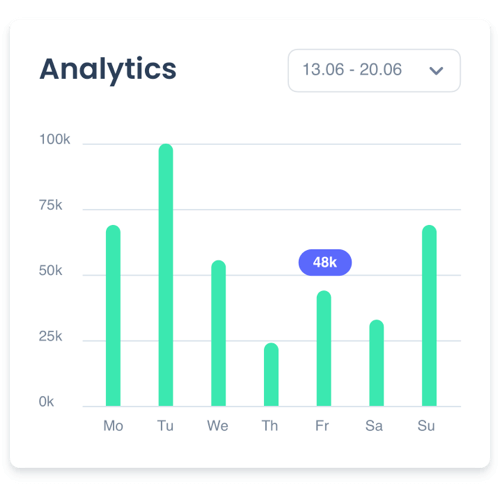

Production model monitoring & drift detection

Model drift affects 93% of deployed systems, causing silent failures that degrade business performance without apparent symptoms. Traditional monitoring approaches are insufficient for ML systems due to their stochastic nature.

Components of our monitoring architecture

- Statistical drift detection: KL-divergence, Energy Distance, Population Stability Index (PSI).

- Performance-based monitoring: real-time accuracy, precision, and recall tracking.

- Feature distribution analysis: automated comparison against training baselines.

- Automated retraining triggers: model refresh based on the severity of drift.
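As a rough illustration of statistical drift detection feeding a retraining trigger, the sketch below computes the Population Stability Index for one numeric feature using only the Python standard library. The function names, the equal-width binning, and the 0.1 / 0.25 thresholds (a common rule of thumb) are assumptions for this example, not a description of a specific production setup.

```python
import math
from bisect import bisect_right


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; PSI is undefined")
    # Interior bin edges are fixed from the baseline (training) range.
    edges = [lo + (hi - lo) * i / bins for i in range(1, bins)]

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range fall into the first or last bin.
            counts[bisect_right(edges, v)] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_action(score, warn=0.1, retrain=0.25):
    """Map a PSI score to an action; thresholds are an assumed rule of thumb."""
    if score >= retrain:
        return "retrain"
    if score >= warn:
        return "alert"
    return "ok"
```

In practice the baseline would be the training distribution of a feature and the current sample a recent window of production traffic, with the thresholds tuned per feature.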

MLOps architecture patterns

We help organizations progress from ad-hoc experiments to reliable, scalable AI by guiding them through a pragmatic MLOps maturity journey: from Minimum Viable ML infrastructure to Production MLOps and ultimately Enterprise MLOps.

Minimum Viable ML infrastructure

- Manual model training in Jupyter notebooks

- Basic model export and deployment

- Single-product, single-model architecture

- Suitable for proof-of-concept validation

Production MLOps

- Automated training pipelines

- Model versioning and A/B testing capability

- Performance monitoring and alerting

- Single-product, multi-model support
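A/B testing between model versions needs a stable way to split traffic. A minimal sketch, assuming requests carry a string identifier: hash the identifier into one of 100 buckets and send the lowest buckets to the candidate model. The `route` function and the "stable" / "candidate" labels are hypothetical names for illustration.

```python
import hashlib


def route(request_id: str, candidate_percent: int) -> str:
    """Deterministically assign a request to the stable or candidate model."""
    # A stable hash means the same id always lands in the same bucket.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "candidate" if bucket < candidate_percent else "stable"
```

Because the assignment depends only on the identifier, a user sees the same model version across requests, which keeps experiment metrics clean.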

Enterprise MLOps

- Multi-product, multi-model orchestration

- Centralized feature stores and data governance

- Advanced monitoring and compliance frameworks

- Cross-team collaboration and resource sharing

Feature store architecture

Feature stores address the fundamental challenge of feature consistency between training and serving environments, eliminating training-serving skew that affects 78% of production ML systems.

Core components of the feature stores we build

- Feature registry: Centralized metadata and lineage tracking.

- Offline store: Historical feature values for training.

- Online store: Low-latency feature serving.

- Computation engine: Transform raw data into features.
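The four components above can be sketched as a toy in-memory store. The key idea it tries to show is that a single transformation code path feeds both the offline and the online store, which is what prevents training-serving skew. The `FeatureStore` class and its methods are hypothetical; real deployments use dedicated storage backends.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class FeatureStore:
    # Registry: feature name -> transformation (metadata kept minimal here).
    registry: Dict[str, Callable[[dict], float]] = field(default_factory=dict)
    # Offline store: append-only history of (entity_id, timestamp, features).
    offline: List[Tuple[str, int, Dict[str, float]]] = field(default_factory=list)
    # Online store: latest feature values per entity for low-latency lookup.
    online: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def register(self, name, transform):
        self.registry[name] = transform

    def ingest(self, entity_id, timestamp, raw):
        # Computation engine: one code path produces features for both stores.
        features = {name: fn(raw) for name, fn in self.registry.items()}
        self.offline.append((entity_id, timestamp, features))
        # Assumes records arrive in timestamp order, so the last write is the latest.
        self.online[entity_id] = features

    def training_rows(self, entity_id):
        return [f for eid, _, f in self.offline if eid == entity_id]

    def serve(self, entity_id):
        return self.online[entity_id]
```

Training reads history through `training_rows`, while inference reads through `serve`; both return values computed by the same registered transformation.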

Model versioning & CI/CD integration

Effective model versioning requires handling large binary artifacts (models), complex dependencies, and reproducible training environments.

For our clients, we usually deploy the following:

- Git-based code versioning for training scripts and configurations.

- DVC/MLflow for model artifact versioning and metadata tracking.

- Docker containerization for environment consistency.

- Automated testing pipelines for model validation.
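To make the idea of automated model validation concrete, here is a small sketch of two checks a CI pipeline might run before promoting a model: a content digest that identifies the exact artifact, and a gate that blocks promotion when a metric regresses. This is not the DVC or MLflow API; `artifact_digest`, `may_promote`, and the 0.01 tolerance are assumptions for illustration, and the gate assumes higher metric values are better.

```python
import hashlib
from pathlib import Path


def artifact_digest(path):
    """SHA-256 of a model file, read in chunks so large artifacts are not loaded at once."""
    h = hashlib.sha256()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def may_promote(candidate, baseline, max_regression=0.01):
    """Allow promotion only if no baseline metric drops by more than max_regression."""
    # A metric missing from the candidate counts as a failure.
    return all(
        candidate.get(name, float("-inf")) >= value - max_regression
        for name, value in baseline.items()
    )
```

A pipeline step could record the digest alongside the Git commit and fail the build whenever `may_promote` returns `False`.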

In ML/AI, we frequently rely on

Our MLOps and AI application delivery pipelines are built on top of a modern Kubernetes foundation. Kubernetes provides the scalability, resilience, and modularity required to transition from proof-of-concept to enterprise-scale AI systems.

Seldon

Kubeflow

NVIDIA GPU Operator

AI services we offer

AI strategy & readiness

- What we do: Use-case discovery, ROI modeling, risk & compliance baseline (GDPR, SOC 2), data/infra gap assessment.

- Deliverables: 6–12-month roadmap, value cases, governance guardrails, TCO & FinOps plan.

Data foundations for AI

What we do

Data quality profiling, lineage, feature standardization, schema governance, and synthetic data where appropriate.

Deliverables

Feature contracts, data SLAs, catalog/registry, training/serving schemas to prevent skew.

Model development

What we do

Classical ML & deep learning, multimodal perception, predictive analytics, legal/contract AI.

Deliverables

Reproducible notebooks/pipelines, baselines + lift, inference services.

Feature store architecture

What we do

Registry, offline/online stores, transformations, low-latency serving.

Deliverables

Reference implementation, SLAs for freshness/latency, governance policies.

MLOps engineering

What we do

CI/CD for models, artifact/versioning (DVC/MLflow), A/B & canary, drift detection, observability.

Deliverables

GitOps repositories, model registry, monitoring dashboards, and auto-retrain triggers.

Edge AI & IoT

What we do

On-device/near-edge inference, micro-K8s at the edge, device identity & OAuth, self-healing meshes.

Deliverables

Edge deployment blueprints, OTA update system, secure device onboarding.

Real-time & streaming AI

What we do

Exactly-once stateful stream processing, online inference, IoT telemetry at 10 ms-class latency.

Deliverables

Stateful pipelines with incremental checkpoints, autoscaling, and back-pressure controls.

Ready to modernize your AI infrastructure?

Let’s discuss how our Kubernetes-first MLOps architectures and AI development services can accelerate your transition from experimental pilots to scalable, production-grade AI systems.