From fragmented reporting to connected, trustworthy news

How our intelligent news aggregation platform unified global coverage, strengthened topic continuity across publications, and delivered expert-verified insights at scale.

Problem

News on the same affairs is scattered and disconnected

This fragmentation prevents analysts, journalists, and researchers from obtaining a holistic view. Without a unified stream of coverage, identifying trends or narrative shifts becomes a manual process that can take several hours per topic, resulting in missed insights and slower reporting cycles.

Publications are often unverified, and expert validation is slow and tedious

Verification requires expert intervention, but manual fact-checking is slow: domain specialists typically spend 38–55 minutes validating a single article, and cross-referencing sources across outlets can multiply this workload. This bottleneck reduces trust, delays decisions, and leaves both professionals and everyday readers uncertain about which sources to rely on.

Solution

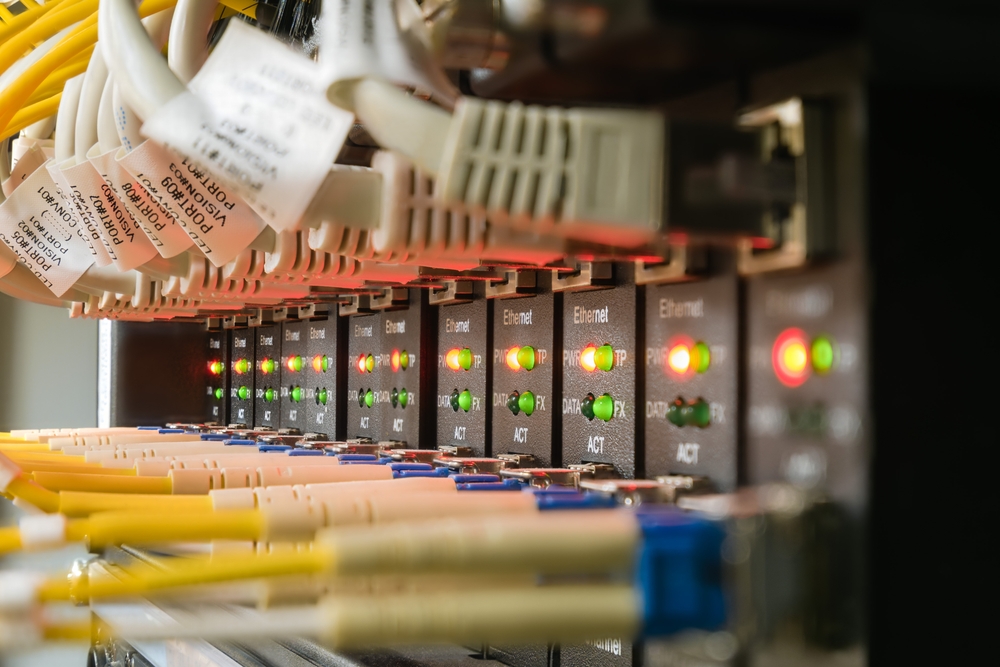

We engineered a high-performance news intelligence platform built around a custom Spark-based streaming web crawler that outperformed existing open-source solutions by 6×, enabling continuous ingestion of millions of articles per hour with predictable latency. This streaming architecture allowed us to normalize content in real time, maintain topic continuity across sources, and eliminate the fragmentation that traditionally slows analysis.

We integrated an advanced MLOps pipeline that runs at the end of each crawl cycle and powers 11 interconnected machine learning models, including sentiment analysis engines, topic classifiers, NLP processors, and LLM-driven semantic linking algorithms. These models work together to group related articles, extract relationships, surface contradictory claims, and automatically generate structured summaries that experts can trust.

The entire system runs on Kubernetes, leveraging auto-scaling and microservice-based processing pipelines to ensure consistent throughput even during significant global news spikes. This architecture provides fault isolation, predictable performance, and seamless horizontal scaling, allowing the platform to handle high-volume events without disruption.

Together, these technologies deliver a unified, trustworthy layer of intelligence where readers instantly see verified coverage across sources, analysts gain consolidated storylines, and experts spend 80–90% less time validating information.

Timeline: 8 months

Business impact

Developed a multi-modal verification model that improved ground-truth detection accuracy by 8% compared to state-of-the-art academic and commercial baselines, dramatically increasing trust in aggregated news signals.

Reduced manual expert review cycles by 95%, transforming a traditionally hours-long validation workflow into near-instant, model-assisted confirmation. Analysts and editors could reallocate their time from repetitive fact-checking to high-value investigative work.

Articles Unified per Topic

Delivered automatic aggregation of related articles into a single coherent topic, enabling users to follow complex affairs seamlessly across publications. This eliminated fragmentation and provided a structured, chronological, and context-rich view of evolving stories.

“For the first time, I can follow an entire story from every angle without drowning in disconnected articles.”

Compliance standards implemented

- GDPR

- ISO 27001

Our solutions comply with GDPR and ISO 27001 and are trusted by clients across regulated industries.

Key technological challenges

Enabling fast model upgrades in an evolving MLOps landscape

Supporting 11 interconnected ML models, including NLP components, sentiment engines, topic classifiers, and LLM-based semantic linkers, required an MLOps pipeline capable of rapid iteration. However, the ecosystem for continuous ML delivery was still far from mature. Ensuring safe, fast, and reproducible model upgrades without disrupting a live high-throughput news pipeline was a core challenge.

We solved this by designing isolated model stages, strict versioned artifacts, and automated validation gates, enabling us to deploy new models or refine existing ones within hours rather than weeks while maintaining end-to-end consistency.
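To make the idea of an automated validation gate concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the platform's actual implementation: the `ModelArtifact` schema, the `passes_gate` function, the metric names, and the 0.01 regression budget are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelArtifact:
    """A versioned model artifact (hypothetical schema for illustration)."""
    name: str
    version: str
    metrics: dict  # metric name -> score on a curated evaluation set


def passes_gate(candidate, current, max_regression=0.01):
    """Return (ok, reasons).

    The candidate is rejected if any metric tracked for the current model
    is missing, or drops by more than max_regression (an assumed budget).
    """
    reasons = []
    for metric, baseline in current.metrics.items():
        score = candidate.metrics.get(metric)
        if score is None:
            reasons.append(f"{metric}: missing from candidate")
        elif baseline - score > max_regression:
            reasons.append(f"{metric}: {baseline:.3f} -> {score:.3f}")
    return (not reasons, reasons)


# Hypothetical artifacts and scores, purely for demonstration.
current = ModelArtifact("topic-classifier", "1.4.0", {"f1": 0.880, "accuracy": 0.910})
good = ModelArtifact("topic-classifier", "1.5.0", {"f1": 0.893, "accuracy": 0.905})
bad = ModelArtifact("topic-classifier", "1.5.1", {"f1": 0.840, "accuracy": 0.912})

print(passes_gate(good, current))  # small accuracy dip stays within budget
print(passes_gate(bad, current))   # f1 regression blocks the rollout
```

A gate like this would run before any rollout step, so a model that regresses on the evaluation set never reaches the live pipeline.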

Adapting a streaming crawler to operate across multilingual environments

Building a Spark-based streaming crawler that runs 6× faster than open-source alternatives required deep architectural optimization, but scaling it across multiple languages added another layer of complexity. Each language introduces unique tokenization rules, content structures, encoding issues, and irregular publication formats.

We addressed this by implementing adaptive parsing layers, language-specific normalization rules, and deployment profiles tailored to regional content patterns. This allowed the crawler to deliver stable performance and accurate extraction across diverse linguistic ecosystems.
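The following Python sketch illustrates what language-specific normalization with an encoding fallback might look like. It is a simplified assumption, not the crawler's real parsing layer: `LANGUAGE_RULES`, `decode_bytes`, and `normalize` are hypothetical names, and only a single Turkish casing rule is shown.

```python
import re
import unicodedata

# Hypothetical per-language rules. Turkish distinguishes dotted and dotless i,
# so generic lowercasing would map these letters incorrectly.
LANGUAGE_RULES = {
    "tr": lambda s: s.replace("\u0130", "i").replace("I", "\u0131"),
}


def decode_bytes(raw, declared_encoding=None):
    """Decode page bytes: try the declared encoding, then common fallbacks."""
    for enc in (declared_encoding, "utf-8", "cp1252"):
        if not enc:
            continue
        try:
            return raw.decode(enc)
        except (UnicodeDecodeError, LookupError):
            continue  # wrong or unknown encoding, try the next one
    return raw.decode("utf-8", errors="replace")


def normalize(text, lang):
    """Normalize text for matching: NFKC, language rule, lowercase, whitespace."""
    text = unicodedata.normalize("NFKC", text)
    rule = LANGUAGE_RULES.get(lang)
    if rule:
        text = rule(text)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()


print(decode_bytes(b"caf\xe9"))             # invalid UTF-8, decoded as cp1252
print(normalize("  ISPARTA\u00a0 ", "tr"))  # Turkish rule: dotless i
print(normalize("  ISPARTA\u00a0 ", "en"))  # default lowercasing
```

Keeping the rules in a lookup table means a new language can be added by registering one function rather than changing the shared normalization path.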

Implementation

Building a streaming-first news ingestion pipeline

To support real-time aggregation of thousands of articles per hour, we implemented a Spark-based streaming ingestion layer that continuously crawls, normalizes, and enriches news content. Instead of relying on batch crawls, the platform runs a custom Scala + Spark Structured Streaming crawler that pulls from diverse sources (RSS, sitemaps, APIs, and HTML pages) and processes them in micro-batches with predictable latency.

The crawler was engineered to outperform existing open-source implementations by a factor of 6×, thanks to:

- Highly parallelized fetch and parse stages

- Adaptive backoff and rate limiting per domain

- Incremental deduplication and change detection at the article level

- Content fingerprinting to avoid reprocessing near-duplicates
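As an illustration of the last two points, the sketch below combines an exact content fingerprint with a shingle-based similarity check. The source does not say which fingerprinting scheme the crawler uses, so this is an assumption: SHA-256 over normalized text for exact duplicates, and Jaccard similarity over 3-word shingles for near-duplicates. The `Deduplicator` class and the 0.8 threshold are hypothetical.

```python
import hashlib
import re


def words(text):
    return re.findall(r"\w+", text.lower())


def fingerprint(text):
    """Hash of the normalized text: equal for copies differing in case or punctuation."""
    return hashlib.sha256(" ".join(words(text)).encode("utf-8")).hexdigest()


def shingles(text, k=3):
    """Set of overlapping k-word sequences."""
    w = words(text)
    if len(w) < k:
        return {" ".join(w)} if w else set()
    return {" ".join(w[i:i + k]) for i in range(len(w) - k + 1)}


def jaccard(a, b):
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


class Deduplicator:
    """Linear scan for clarity; a large-scale system would likely use MinHash/LSH."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.seen = {}  # fingerprint -> original text

    def check(self, text):
        fp = fingerprint(text)
        if fp in self.seen:
            return "duplicate"
        if any(jaccard(text, old) >= self.threshold for old in self.seen.values()):
            return "near-duplicate"
        self.seen[fp] = text
        return "new"


dedup = Deduplicator()
articles = [
    "Central bank raises interest rates by half a point amid inflation fears",
    "CENTRAL BANK raises interest rates by half a point, amid inflation fears!",
    "Central bank raises interest rates by half a point amid inflation fears today",
    "Local team wins championship after dramatic penalty shootout",
]
results = [dedup.check(a) for a in articles]
print(results)
```

Only articles classified as `new` would continue to the enrichment stages; the other two classes can be linked to the stored original instead of being reprocessed.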

Ingested articles are pushed into a Kubernetes-hosted microservice pipeline, where each service handles a well-defined stage: parsing, normalization, language detection, metadata enrichment, and model inference. This separation ensures that a traffic spike for a single story does not overload the entire system; only the affected stages scale up.
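Per-stage scaling can be sketched with the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance. The Python below only mirrors that arithmetic for illustration; the stage metrics (backlog per replica), replica counts, and limits are hypothetical numbers, not measurements from the platform.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=50, tolerance=0.1):
    """Replica count following the documented HPA formula, clamped to limits."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# stage -> (current replicas, observed backlog per replica, target backlog per replica)
stages = {
    "parsing": (4, 100, 100),
    "language-detection": (2, 95, 100),
    "model-inference": (3, 450, 100),  # a breaking story floods inference
}

plan = {name: desired_replicas(*state) for name, state in stages.items()}
print(plan)
```

In this example only `model-inference` grows, while the stages that are at or near their target keep their current replica counts.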

Orchestrating 11 ML models at the end of the crawl cycle

As early adopters of advanced MLOps, we connected 11 ML models to the ingestion flow, including:

- Sentiment analysis models for per-article and per-topic sentiment

- NLP pipelines for entity extraction, topic classification, and relation detection

- LLM-based models (such as BERT) for semantic linking, contradiction detection, and abstractive summarization

We built a model-serving layer that exposes each model behind a stable API, backed by:

- Versioned model artifacts and configuration manifests

- Canary-style rollouts for new model versions

- Automated regression checks on curated evaluation sets

- Shadow deployments to compare new vs. current models in production without risk

This allows the platform to upgrade or replace models within 50 minutes, while keeping the rest of the system unchanged. Feature computation, inference, and post-processing are fully observable, with metrics feeding into dashboards and alerts to detect drift, performance regressions, or anomalous outputs early.
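The shadow-deployment idea can be illustrated with a short Python sketch: every request is answered by the current model, while the candidate runs on the side and only its disagreements are recorded. This is a simplified assumption about how such a comparison could work; `shadow_compare` and the two keyword-based toy models are hypothetical stand-ins for real model endpoints.

```python
def shadow_compare(requests, current_model, candidate_model):
    """Serve with the current model; run the candidate in the shadow.

    Returns (served responses, agreement rate, list of disagreements).
    """
    served, disagreements = [], []
    for req in requests:
        live = current_model(req)
        served.append(live)  # users only ever see the current model's output
        try:
            shadow = candidate_model(req)
        except Exception as exc:  # candidate failures must not affect serving
            shadow = f"error: {exc}"
        if shadow != live:
            disagreements.append((req, live, shadow))
    agreement = 1 - len(disagreements) / len(requests) if requests else 1.0
    return served, agreement, disagreements


# Toy sentiment models, purely for demonstration.
def current_model(text):
    return "positive" if "gain" in text.lower() else "negative"


def candidate_model(text):
    lowered = text.lower()
    return "positive" if ("gain" in lowered or "rally" in lowered) else "negative"


headlines = [
    "Stocks gain on earnings",
    "Markets rally after vote",
    "Shares slump on warning",
    "Oil prices gain again",
]
served, agreement, disagreements = shadow_compare(headlines, current_model, candidate_model)
print(agreement)
print(disagreements)
```

The recorded disagreements are the cases worth reviewing before promoting the candidate, and the agreement rate is a natural metric to feed into dashboards and alerts.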

The engine behind our client's breakthrough

Some of the more than 72 technologies we use.

Apache Spark

Kubernetes

Java

Scala

Python

PyTorch

Kafka

GPTs

Elasticsearch

Angular

FluxCD

The Enliven Systems advantage

Enliven Systems helps ambitious companies turn data into a competitive advantage through cutting-edge AI engineering, research, and cloud optimization.

Distinguished talent pool

Predictable delivery

Experienced researchers

Success in a broad spectrum of applications

Let's build your next success story

Take the first step to transform your data into intelligence that drives impact:

- Consolidate global news into coherent, story-driven topics with 6× faster ingestion and enrichment

- Boost analyst and editorial productivity by cutting review cycles by 95%

- Expand effortlessly into new regions with language-adaptive crawling and deployment

- Ensure long-term reliability with our scalable, Kubernetes-native microservice architecture

- Grow confidently with future-proof MLOps pipelines that support rapid model upgrades and zero vendor lock-in